👋 Hi!

I am an HCI PhD student at the University of Toronto's DGP Lab, advised by Prof. Tovi Grossman.

Currently, I am working on generative and adaptive UIs to rethink how people use computers to learn, work, and create. Many exciting projects are on the way, so stay tuned!

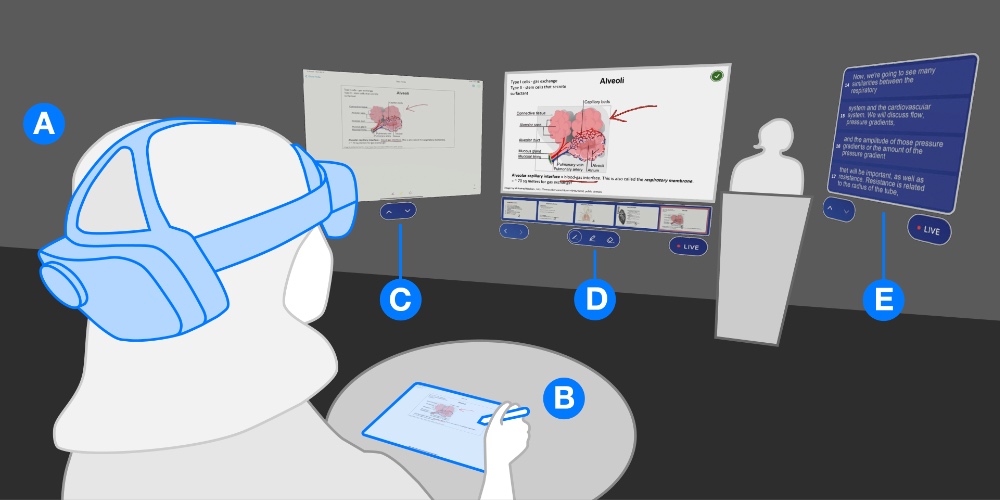

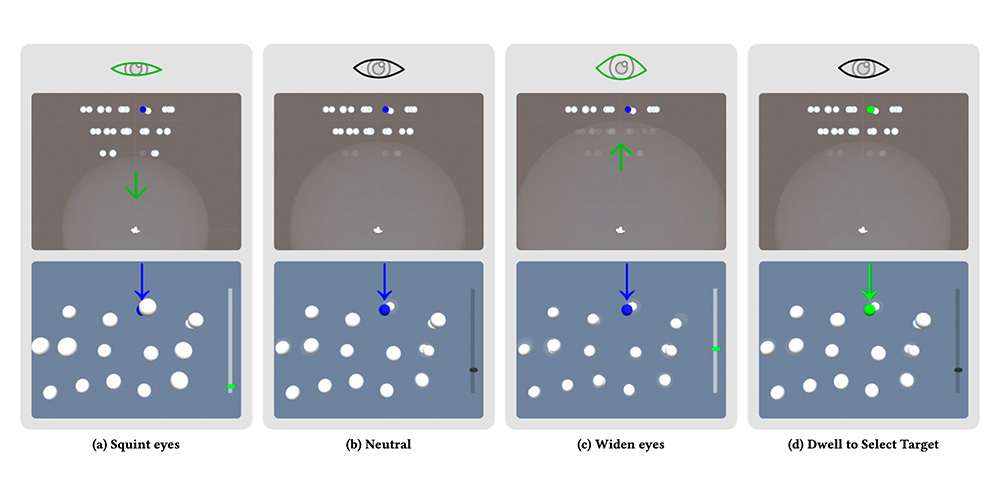

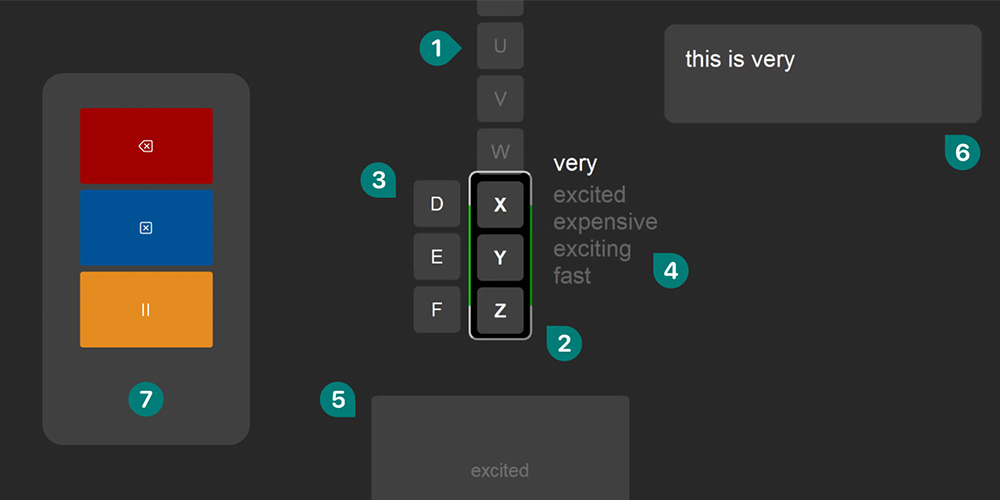

Previously, I worked on extended reality systems for learning and task guidance, as well as novel gaze-based interactions for pointing and accessibility. I received my MSc in Computer Science from the University of Toronto and my BEng in Computer Science from Tsinghua University. I've also worked as a research assistant with the Tsinghua Pervasive HCI Group and the Stanford HCI Group.